First of all, let’s get the elephant in the room out of the way: no new iPhone was announced. I was not necessarily expecting one to show up - that seems more suited to a September event, unless there were specific iOS features that were enabled by new hardware and that developers needed to know about.

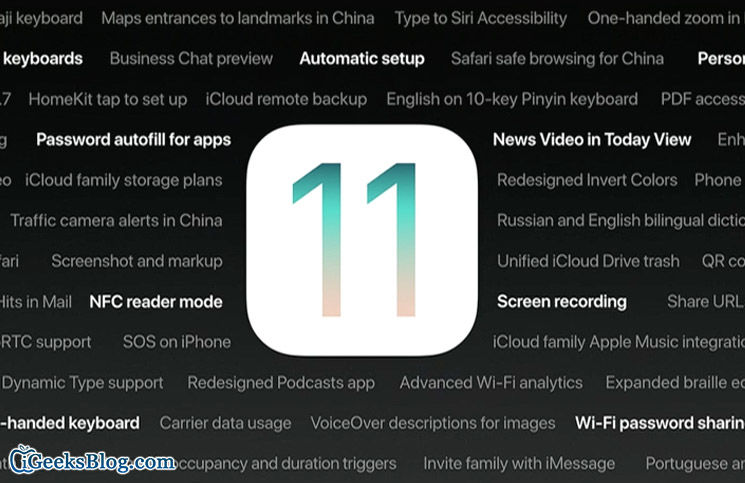

We did get a whole ton of new features for iOS 11 (it goes up to eleven!), but many of them were aimed squarely at the iPad. With no new iPhone, the iPad got most of the new product glory, sharing only with the iMac Pro and the HomePod (awful name, by the way).

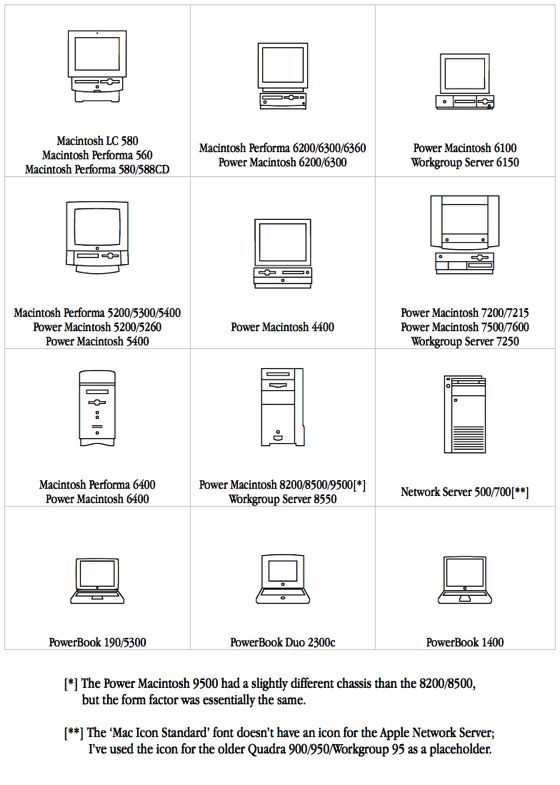

On that note, some people were confused by the iMac Pro, but Apple has helpfully clarified that there is also going to be a Mac Pro and external displays to go with it:

> In addition to the new iMac Pro, Apple is working on a completely redesigned, next-generation Mac Pro architected for pro customers who need the highest-end, high-throughput system in a modular design, as well as a new high-end pro display.

I doubt I will ever buy a desktop Mac again, except possibly if Apple ever updates the Mac mini, so this is all kind of academic for me - although I really hope the dark-coloured wireless extended keyboard from the iMac Pro will also be available for standalone purchase.

What I am really excited about is the new 10.5" iPad Pro and the attendant features in iOS 11 [1]. The 12.9" is too big for my use case (lots of travel), and the 9.7" Pro always looked like a placeholder device to me. Now we have a full lineup, with the 9.7" non-Pro iPad significantly different from the 10.5" iPad Pro, and the 12.9" iPad Pro there for people who really need the larger size - or maybe just don’t travel with their iPad quite as much as I do.

My current iPad (an Air 2) is my main personal device apart from my iPhone. The MacBook Pro is my work device, and opening it up puts me in "work mode", which is not always a good thing. On the iPad, I do a ton of reading, but I also create a fair amount of content. The on-screen keyboard and various third-party soft-tip styluses (styli?) work fine, but they’re not ideal, and so I have lusted after an iPad Pro for a while now. However, between the lack of sufficient hardware differentiation compared to what I have [2] and the lack of software support for productivity, I never felt compelled to take the plunge.

Now, I can’t wait to get my hands on an iPad Pro 10.5".

I already use features like the sidebar and side-by-side multitasking, but what iOS 11 brings is an order of magnitude beyond - especially with the ability to drag & drop between applications. Right now, while I may build an outline of a document on my iPad, I rarely do the whole thing there, because it is just so painful to do any complex work involving multiple switches between applications - so I end up doing all of that on my Mac.

The problem is that there is friction in working with a Mac: I need (or feel that I need) longer stretches of time and a more work-like environment before I pull out my Mac. That friction is completely absent with an iPad. I am perfectly happy to get it out if I have more than a minute or so to myself, and there is plenty of room to work on an iPad in settings (such as, to pick an example at random, an economy seat on a short-haul flight) where there is simply no room to type on a Mac.

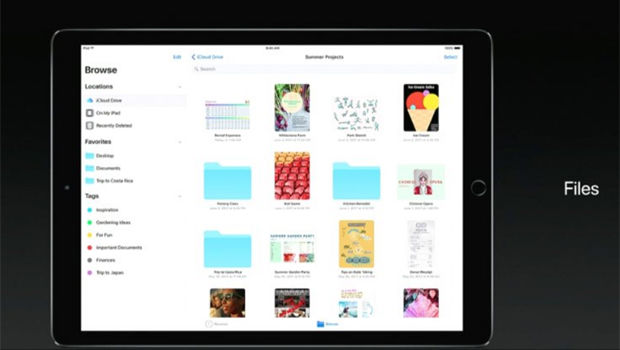

The new Files app also looks very promising. Sure, you can sort of do everything it does in a combination of iCloud Drive, Dropbox, and Google Drive, and I do - but I always find myself hunting around for the latest revision, and then turning to the share sheet to get whatever I need to where I can actually work on it.

With iOS 11, it looks like the iPad will truly start delivering on its promise as (all together now) a creation device, not just a consumption device.

Ask me again six months from now…

And if you want more exhaustive analysis, Federico Viticci has you covered.

[1] Yes, there was also some talk about the Watch, but since I gave up on fitness tracking, I can't really see the point in that whole product line. That's not to say that it has no value, just that I don't see the value to me. It certainly seems to be the smartwatch to get if you want to get a smartwatch, but the problem with that proposition is that I don't particularly want any smartwatch.

[2] To me this is the explanation for the 13 straight quarters of falling iPad sales: an older iPad is still a very capable device, and outside of very specific use cases, or people upgrading from something like an iPad 2 or 3, there hasn’t been a compelling reason to upgrade - yet. For me at least, that compelling reason has arrived, with the combination of the 10.5" iPad Pro and iOS 11. After the holiday quarter, I suppose we will find out how many people feel the same way.