A perennial problem in tech is people building something that is undeniably cool, but is not a viable product. The most common definition of "viable" revolves around the size and accessibility of the target market, but there are other factors as well: sustainability, profitability, growth versus funding, and so on.

I am as vulnerable as the next tech guy to this disease, which is just one of many reasons why I stay firmly away from consumer tech. I know myself well enough to be aware that I would fall in love with something that is perfectly suited to my needs and desires — and therefore has a minuscule target market made up of me and a handful of other weirdos.

One of the factors that makes this a persistent problem, as opposed to one that we as an industry can resolve and move on from, is that advancing tech continuously expands the frontiers of what is possible, but market positioning does not evolve in the same direction or at the same speed. If something simply can't be done, you won't even get to the "promising demo video on Kickstarter" stage. If on the other hand you can bodge together some components from the smartphone supply chain into something that at least looks like it sort of works, you might fool yourself and others into thinking you have a product on your hands.

The thing is, a product is a lot more than just the technology. There are a ton of very important questions that need to be answered — and answered very convincingly, with data to back up the answers — before you have an actual product. Here are some of the key questions:

- How many people will buy one?

- How much are they willing to pay?

- Given those two numbers, can we even manufacture our potential product at a cost that lets us turn a profit? If we have investors, what are their expectations for the size of that profit?

- Are there any regulations that would bar us from entering a market (geographical or otherwise)? How much would it cost to comply with those regulations? Are we still profitable after paying those costs?

- How are we planning to do customer acquisition? If we have a broad market and a low-cost product, we're going to want to blanket that segment with advertising and have as self-service a sales channel as possible. On the other hand, if we are going high-end and bespoke, we need an equally bespoke sales channel. Both options cost money, and they are largely mutually exclusive. And again, that cost comes out of our profit margin.

- What's the next step? Is this just a one-shot campaign, or do we have plans for a follow-on product, or an expansion to the product family?

- Who are our competitors? Do they set expectations for our potential customers?

- How might those competitors react? Can they lower their own prices enough that we have to reduce ours and erode our profit margin? Can they cross-promote with other products while we are stuck being a one-trick pony?
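
The first few questions above boil down to arithmetic you can do on the back of an envelope. Here is a minimal sketch of that sum in Python. Every name and number in it is a made-up placeholder for illustration, not data from any real product, and it deliberately ignores things like investor expectations and competitor price cuts.

```python
from dataclasses import dataclass


@dataclass
class ProductPlan:
    """Hypothetical inputs; every figure is a placeholder, not real data."""
    expected_buyers: int               # how many people will buy one
    price: float                       # how much each is willing to pay
    unit_cost: float                   # manufacturing cost per unit
    compliance_cost: float             # one-off cost of meeting regulations
    acquisition_cost_per_buyer: float  # advertising / sales cost per customer

    def margin_per_unit(self) -> float:
        # What is left from each sale after building and selling it.
        return self.price - self.unit_cost - self.acquisition_cost_per_buyer

    def profit(self) -> float:
        # Per-unit margin across all buyers, minus the fixed compliance bill.
        return self.expected_buyers * self.margin_per_unit() - self.compliance_cost

    def break_even_buyers(self) -> float:
        # Buyers needed to cover the fixed cost; infinite if each sale loses money.
        margin = self.margin_per_unit()
        return float("inf") if margin <= 0 else self.compliance_cost / margin


# Invented example: a 200-unit-price gadget with 10,000 expected buyers.
plan = ProductPlan(
    expected_buyers=10_000,
    price=200.0,
    unit_cost=120.0,
    compliance_cost=500_000.0,
    acquisition_cost_per_buyer=40.0,
)
print(plan.profit())             # negative means the plan loses money
print(plan.break_even_buyers())  # compare against expected_buyers
```

With these invented figures the plan loses money: the margin per unit is positive, but there are not enough buyers to cover the fixed compliance cost. That is exactly the kind of result you want to see in a spreadsheet before you see it in your bank account.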

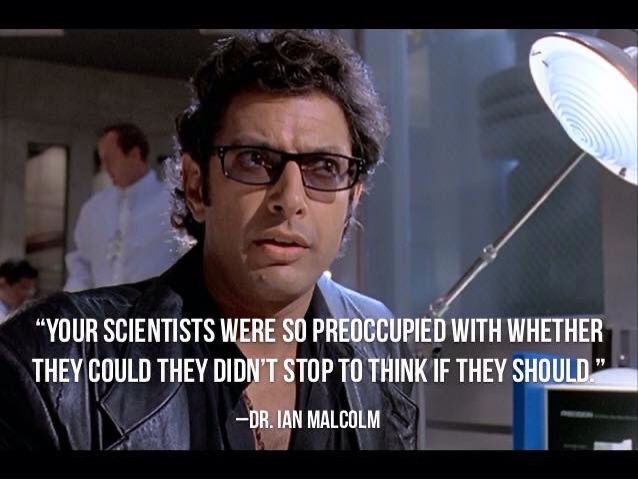

These are just some of the obvious questions, the ones that you should not move a single step forward without being able to answer. There are all sorts of second- and third-order follow-ups to these. Nevertheless, things-that-are-not-viable-products keep showing up, simply because they are possible and technically cool.

Possible, Just Not Viable

One example of how this process can play out would be Google Stadia (RIP). At the time of its launch, everyone was focused on technical feasibility:

[...] streaming games from datacenters like they’re Netflix titles has been unproven tech, and previous attempts have failed. And in places like the US with fixed ISP data caps, how would those hold up to 4-20 GB per hour data usage?

[...] there was one central question. Would it even work?

Some early reviewers did indeed find that the streaming performance was not up to scratch, but all the long-term reports I heard from people like James Whatley were that the streaming was not the problem:

The gamble was always: can Google get good at games faster than games can get good at streaming. And I guess we know (we always knew) the answer now. To be clear: the technology is genuinely fantastic but it was an innovation that is looking - now even more overtly - for a problem to solve.

As far as we can tell from the outside (and it will be fascinating to read the tell-all book when it comes out), Google fixated on the technical aspect of the problem. In fairness, they were and are almost uniquely well-placed to make the technology behind game streaming work: data centers everywhere, fast network connections, and in-house expertise on low-latency data streaming. The part which apparently did not get sufficient attention was how to turn those technical capabilities into a product that would sell.

Manufacturing hardware is already not Google's strong suit. Sure, they make various phones and smart home devices, but they are bit players in terms of volume, preferring to supply software to an ecosystem of OEMs. However, what really appears to have sunk Stadia is the pricing strategy. Charging a monthly subscription and also requiring players to buy individual games appears to have been a deal-killer, especially in the face of other streaming services from long-established players such as Microsoft or Sony which only charge a subscription fee.

To recap: Google built some legitimately very cool technology, but priced it in a way that made it unattractive to its target customers. Those customers were already well-served by established suppliers, who enjoyed positive reputations — as opposed to Google's reputation for killing services, one that has been further reinforced by the whole Stadia fiasco. Finally, there was no uniquely compelling reason to adopt Stadia — no exclusives, no special integration with other Google services, just "isn't it cool to play games streamed from the cloud instead of running on your local console?" Gamers already own consoles or game on their phones, especially the ones with the sort of fat broadband connection required to enable Stadia to work; there is not a massive untapped market to expand into here.

So much for Google. Can Facebook — sorry, Meta — do any better?

Open Questions In An Open World

Facebook rebranded as Meta to underline its commitment to a bright AR/VR future in the Metaverse (okay, and to jettison the increasingly stale and negative branding of the Blue App). The question is, will it work?

Early indications are not good: "Meta’s flagship metaverse app is too buggy and employees are barely using it, says exec in charge". Always a sign of success when even the people building the thing can't find a reason to spend time with it. Then again, in fairness, the NYT reports that spending time in Meta's Horizon VR service was "surprisingly fun", so who knows.

The key point is that the issue with Meta is not one of technical feasibility. AR/VR are possible-ish today, and will undoubtedly get better soon. Better display tech, better battery life, and better bandwidth are all coming anyway, driven by the demands of the smartphone ecosystem, and all of that will also benefit the VR services. AR is probably a bit further out, except for industrial applications, due to the need for further miniaturisation if it's going to be accepted by users.

The relevant questions for Meta are not tech questions. Benedict Evans made the same point discussing Netflix:

As I look at discussions of Netflix today, all of the questions that matter are TV industry questions. How many shows, in what genres, at what quality level? What budgets? What do the stars earn? Do you go for awards or breadth? What happens when this incumbent pulls its shows? When and why would they give them back? How do you interact with Disney? These are not Silicon Valley questions - they’re LA and New York questions.

The same factors apply to Horizon. It's a given that Meta can build this thing; the tech exists or is already on the roadmap, and they have (or can easily buy) the infrastructure and expertise. The questions that remain are all "but why, tho" questions:

- Who will use Horizon? How many of these people exist?

- How will Horizon pay for itself? Subscriptions — in exchange for what value? Advertising — in what new formats?

- What's the plan for customer acquisition? Meta keeps trying to integrate its existing services, with unified messaging across Facebook, Instagram, and WhatsApp, but it doesn't really seem to be getting anywhere with consumers.

- Following on from that point, is any of this going to be profitable at Meta's scale? That qualification is important: to move the needle for Zuckerberg & co., this thing has to rope in hundreds of millions of users. It can't just hit a Kickstarter milestone and declare victory.

- What competitors are out there, and what expectations have they already set? If Valve failed to get traction with VR when everybody was locked down at home and there was a new VR-exclusive Half-Life game, what does that say about the addressable market?

None of these are questions that can be answered based on technical capabilities. It doesn't matter how good the display tech in the headsets is, or whether engineers figure out how to give Horizon avatars innovative features such as, oh I don't know, legs. What matters is what people can do in Horizon that they can't do today, IRL or in Flatland. Nobody will don a VR headset to look at Instagram photos; that works better on a phone. And while some people will certainly try to become VR influencers, that is a specialised skill requiring a ton of support; not every aspiring singer, model, or fitness instructor will make that transition. Meta will need a clear and convincing answer that is not "what if work meetings but worse in every way".

So there you have it: one failed product and one that is still unproven, both cautionary tales of putting the tech before the actual product.