As I mentioned in my one-year review of my car, the one aftermarket upgrade I made was to swap the rather dated factory ICE for a CarPlay head unit. That modification is itself now about a year into its service, so it is also about due a review.

The reason for the upgrade is that the factory PCM 2.1 unit was really showing its age, with no USB, Bluetooth, or even Aux-in. In other words, Porsche were way ahead of Apple in removing the headphone jack… Courage!

This meant it was not possible to connect my phone to the car. Instead, I had a second SIM card which lived in the dash itself, and a curly-cord handset in the armrest between the front seats. Very retro, but not the most practical solution.

The worst part, though, was the near decade-old maps. While we do have some roads around here that are a couple of thousand years old, lots of them are quite a bit newer, and even on the Roman roads, it’s important to know about one-way systems and traffic restrictions.

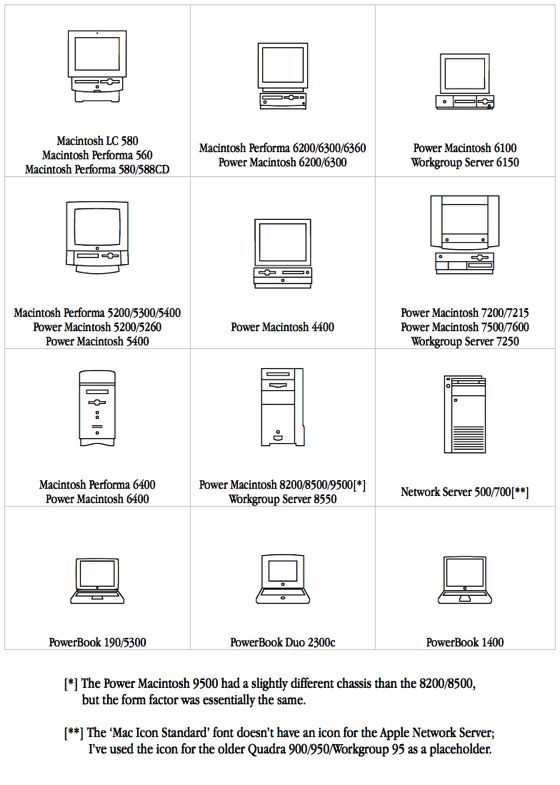

My solution for these problems was to swap the PCM 2.1 system for a head unit that is basically just a dumb screen driven by an iPhone, with no functionality of its own beyond an FM tuner. The reason is that I change phones much more frequently than I change cars, and upgrade the software on my phone more frequently than that.

The specific device is an Alpine ILX-007, and I am quite satisfied with it. It has a decent screen, which seems to be one of the key complaints people have about other CarPlay systems. There is occasionally a little lag, but I assume that’s software rather than hardware, since it’s not reproducible. It did crash on me once, losing my radio presets, but that’s it.

Upgrades

Adding this system to my car has been a substantial upgrade. I have all my music, podcasts and so on immediately available, I can make phone calls, and there is even a dedicated button to talk to Siri. I use this a lot to set reminders for myself while driving, as well as for obvious stuff like calling people.

Siri also reads messages that come in while the phone is in CarPlay mode, which is occasionally hilarious when she tries to read something written in a language other than English. On the other hand, Siri handles emoji pretty well, reading out their names (e.g. "face blowing kisses"), which is very effective at getting the meaning across, although it’s a bit disconcerting the first time it happens!

Contrary to my early fears about CarPlay, it works perfectly with my steering-wheel controls too, so ergonomics are great.

The main win though is that my in-car entertainment now benefits from iOS upgrades in a big way. In particular, iOS 10 brings a redesigned Music screen and a major update to Maps.

Show me around

The Music screen used to have five tabs, which is way too many to navigate while driving. The new version has three tabs, and is generally much clearer to use. I don’t use Apple Music, and one of the things that I hated about the old version was that it would default to the Apple Music tab. The biggest reason why I don’t use streaming services like Apple Music is that the only time I really get to listen to music is while I’m out and about. That means either in aeroplanes, where connectivity is generally entirely absent, or in the car, where it is unreliable and expensive. Therefore, I only listen to music stored locally on my phone, and with the old version I had to switch away from the Apple Music tab each and every time I launched the Music app. iOS 10 fixes that.

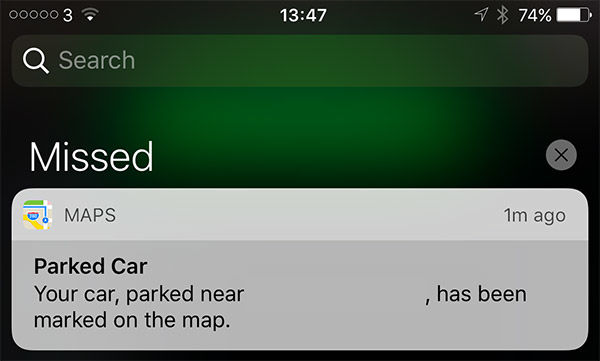

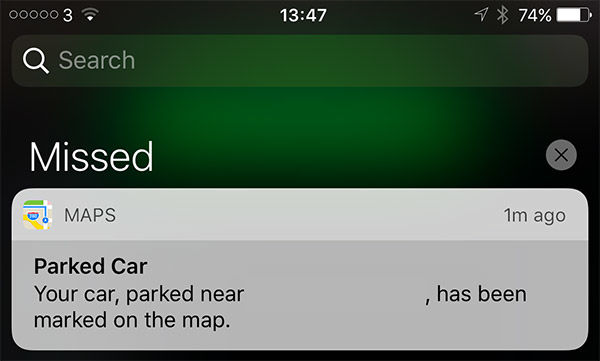

The biggest change iOS 10 brings to the CarPlay experience is to Maps. Many people have pointed out that Maps will now add a waypoint when the iPhone is disconnected from the car, so that drivers can easily retrace their steps to their parked car. I have to admit that I have never lost my car, but it’s good to know that it’s, say, ten minutes’ walk away when it’s raining.

There are also updated graphics, which are much clearer to read in a hurry. These are not just limited to pretty icons, though; there is actual improved functionality. Previously, users had to switch manually between separate Overview and Detail modes. Annoyingly, there was a significant gap between the greatest zoom on Overview and the widest area on Detail. Also, Detail did not include traffic alerts, while Overview by default showed the entire route, not just currently relevant parts, so a typical journey required a fair amount of switching back and forth between modes.

The new Maps zooms gradually over the course of the journey, always showing the current position near one edge of the screen and the destination near another edge. This is much more useful, allowing the driver to focus on alerts that are coming up rather than being distracted by ones that have already been passed. There is also more intelligence about proposing alternate routes around congestion.

And yes, Maps works perfectly well for me, thank you. I would probably use it anyway given that, as the system-level mapping service, it plugs into everything, so I can quickly get directions to my next appointment from the calendar or go to a contact’s home or office address. The search could still be better, requiring very precise phrasing, but contrary to Maps’ reputation out there, landmarks generally exist and are in the correct place.

I am on record as an Apple Maps fan even in the early days, and it’s improved enormously since then. Don’t believe the hype, give it a go.

The integration is a big deal, as I saw last Wednesday. I was supposed to meet a colleague out and about, so I used Messages to send him my current location. To be extra sure, I chose the actual restaurant I was in, rather than just my GPS location. All my colleague needed to do was to tap on the location in the chat to be routed straight to me. Unfortunately, he is one of those who prefer Google Maps, so he eyeballed the pin location and entered that in Google Maps. Sadly for him, the name he picked off the map turned out to belong to a chain, and Google, in its eagerness to give a result (any result), gave him the location of the nearest branch of that chain, rather than the specific branch I was sitting in.

It all worked out in the end, after a half-hour detour and a second taxi trip…

Trust the system, it works.

The System Works

This is exactly why I got a CarPlay unit in the first place: so I would get updates in the car more frequently than every few years when I get a whole new car. So far, that’s working out just perfectly. The iOS 10 upgrade cleaned up some annoyances and added convenient new features without requiring me to rip out all my dashboard wiring. I won’t consider another car without CarPlay support.