Conference season has started up again with a vengeance after the summer break. If you’ve ever staffed or attended a conference, you know that there is always a room (or a hallway, or an out-of-the-way closet) where sponsors can set up more or less elaborate booths and talk to attendees about their offerings.

Staffing a booth is a discipline of its own, and how you approach it varies a lot depending on which company you represent and which event you are at. Let’s go through some of the factors that determine what belongs in a booth – and what doesn’t.

What is the goal of the sponsorship?

Depending on the company and the event, the goal of an event sponsorship can vary widely. Sometimes you might be there to scan literally every attendee’s badge and get their contact details so that you can follow up later. In this case, you want the flashy giveaway, the must-play game, and in general the fun, look-at-me booth. You also want to make sure that you can process people through pretty quickly; it’s a numbers game.

In other situations – different event audience, or different product and pitch on your part – that is exactly the opposite of what you want. You are aiming for a smaller number of longer and deeper conversations. The sorts of attendees you want will be turned off by queues or flashy displays, and may prefer a sit-down conversation to standing at a demo pod.

Make sure that Sales and Marketing agree on the goals! I have personally been involved in events that Marketing considered a great success – "look at how many leads we generated!" – but that Sales dismissed as a waste of time – "those leads don’t convert". Have that conversation up front, because afterwards it’s too late.

Outside help

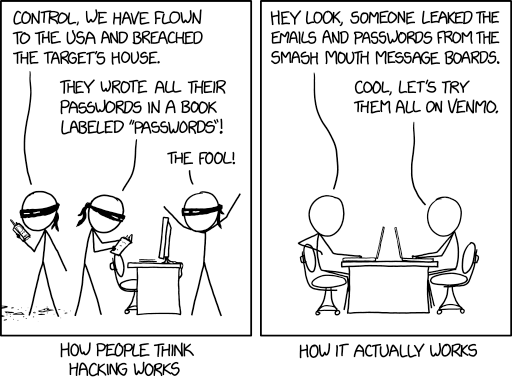

At many events, at least some of the booth staffers will be outside contractors, not employees of the company sponsoring the booth. A few years ago "contractor" would have been a euphemism for "booth babe", someone significantly younger than the average conference attendee, generally of the opposite sex to most of the attendees, and wearing significantly less clothing. This kind of contractor is there mainly as eye candy to attract passing traffic.

At least at the sort of conference I go to, the straight-up "booth babe" sort of thing has more or less completely died out – and good riddance to it. Even so, there are still a lot of contractors about, especially at larger events such as Mobile World Congress. They are there to give a pre-rehearsed short pitch and hand out collateral and swag, no more.

There is nothing inherently wrong with using outside help in this way, but it does influence what the typical attendee experience of your booth will be – and therefore what type of leads you will get.

Be in the room

If you’re working a booth, again, know what your goal is. If you want all the leads you can get, go stand out in the hallway with an armful of T-shirts or beer coozies or whatever your giveaway is, and scan everybody in sight. If you’re after more in-depth conversations, stay in your booth perimeter and wait for people to come to you.

Either way, don’t just hang out in the booth, playing with your phone or talking to your colleagues – and definitely don’t get out the laptop and try to work in the booth. You’re there to be available to attendees! If you need to do something urgently, step out of the booth, find a café or whatever, and work from there. There may be a sponsor lounge, or if you’re a speaker there is almost always some sort of green room with WiFi and coffee – and with any luck, a somewhat ergonomic table to work at.

Booth design matters

The booth design is also a factor, and it will change based on your company’s market profile, the event, and once again, your goal for the event. If your company is well-known enough that people will stop by just to see what you’re up to or grab the latest swag, your booth needs to be all about whatever is the newest thing you want to get out there. If you are a startup or a new entrant, you need something eye-catching that explains what your core value proposition is. Either way, keep it simple: nobody reads more than a handful of words on a booth, and they need to be able to do that from a distance, on the move, with a crush of people between them and you.

Different events may also need different designs. If you’re at, say, a Gartner event where most of the attendees are dressed formally, you need to be a bit more grown up too, both in wording and in presentation. Focus on business value and outcomes rather than tech buzzwords. On the other hand, if you’re at a tech-centric event where most people are wearing black T-shirts, you do want the feature checklist, and your benefits need to be couched in technical terms too. This is a speeds-and-feeds crowd, and you should cater to that.

Collateral and handouts

Collateral is a hard one. I have long advocated doing away with take-home collateral entirely, and instead offering to email people about topics they care about – which is an excuse to have a conversation and uncover those topics! You might also consider a row of QR codes on a wall that people can scan to request particular items. This is both more ecological and more practical, since most printed collateral is never read.

However, in certain industries and regions people do actually want something to take away with them, so be aware of those preferences and make sure you cater to them.

The one piece of printed collateral I do like to have in a booth is an architecture diagram, because you can pick it up and use it as a visual aid in conversations, even if people never take it with them. At smaller events I’ve also done this with a diagram printed on the wall or even a whiteboard in the booth, but when several staffers need the visual aid at the same time, that gets messy. Better to have one each!

I wrote down some more in-depth advice about conference collateral here.

Further reading

Those are my thoughts, but here are some more from Cote. There is some excellent advice here – do read it! You can sign up for his newsletter here – and if you like this sort of thing, his podcast is pretty good too.

🖼️ Photos by Jezael Melgoza and Cami Talpone on Unsplash