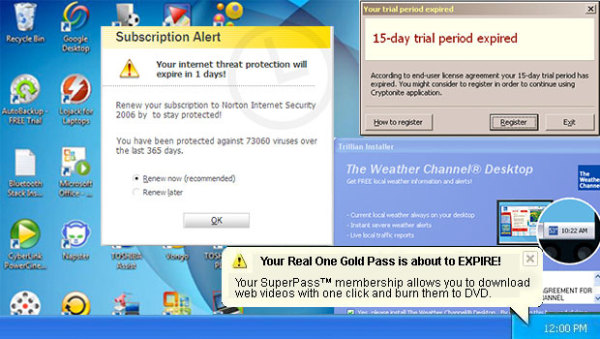

I wonder why it is that thin clients aren’t catching on. I mean, who wouldn’t want this user experience?

The photo seems to be a valid summary of the rest of the review, too.

John Moltz points out that Apple has been doing wearables for over a decade:

Apple press release from Macworld 2003:

Burton Snowboards and Apple® today unveiled the limited-edition Burton Amp, the world’s first and only wearable electronic jacket with an integrated iPod™ control system.

Much like today’s wearables, it was a huge success. They sold literally dozens of them.

This wasn’t a one-off, either: [Ermenegildo Zegna did their own version](http://www.engadget.com/2006/10/13/ermenegildo-zegnas-ipod-ready-ijacket/ "Ermenegildo Zegna's iPod-ready iJacket"), much better looking and of course at eye-watering cost. I saw one of these in a shop, and it did look very good, but it cost more than my first car, so I passed.

Apple and their partners have had actual products in the market for a decade. Google shows a bunch of vapourware, and they get all the press without having to deliver anything…

All this is to say: wearable tech isn’t exactly new. My own experience: after spending a couple of years with activity trackers on my wrist, I have reverted to using my iPhone for that. I also like nice watches - almost the only form of jewellery men can wear - and don’t plan on giving up any of mine just to get notifications on yet another screen. I may be wrong, but in a world where watches and even jackets last longer than smartphones, I can’t see any reason for wearables to take off.

Okay, call me a huge snob - you won’t be the first - but mass adoption of computers has brought some downsides. Interfaces have all sorts of helpful features which are designed to assist people unfamiliar with computers to navigate them successfully. The trouble begins when those features get in the way of users who do know their way around - and there’s no way to turn them off.

One relatively recent example is the shared URL and search bar. Time was, all of a couple of years ago, browsers had one field where you could enter an address, and a different, separate field where you could enter a search. Because they were separate fields, both users and auto-completion algorithms understood which was which, and everything worked fine.

Then the Chrome developers decided that two fields was one too many, and they would rather everything went through Google anyway. That’s fine, that’s their prerogative. The problem is, all the other browsers followed them over the Cliff of Stupid, and got rid of the separate search field. It used to be that if I wanted to access a host on my local net, I could type "gandalf" in the field and get sent to http://gandalf. No longer! Now I get Google results for "gandalf". Whyyyyy
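To make the ambiguity concrete, here is a toy version of the decision a combined field has to make for every input. It is a minimal sketch under my own assumptions, not how Chrome or any other browser actually works: `classify` and `known_local_hosts` are hypothetical names invented for illustration.

```python
def classify(text: str, known_local_hosts: frozenset = frozenset()) -> str:
    """Guess whether combined-bar input is an address or a search query.

    Hypothetical heuristic for illustration only; real browsers use
    more elaborate (and different) rules.
    """
    text = text.strip()
    # Empty input or several words: almost certainly a search.
    if len(text.split()) != 1:
        return "search"
    # An explicit scheme, a dot, or a host we already know about
    # looks like an address.
    if "://" in text or "." in text or text in known_local_hosts:
        return "navigate"
    # A bare single word is genuinely ambiguous; this sketch, like the
    # behaviour complained about above, falls back to search.
    return "search"
```

With two separate fields the last branch never has to guess. With one field, a bare "gandalf" only reaches the local host if something (here the assumed `known_local_hosts` set) tells the browser it is a host, or if you type the full http://gandalf yourself.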

Another favourite, related to the previous item: Google auto-correct for search terms. Search for "bursty synonym"? Google asks "did you mean busty?". NO I DANG WELL DID NOT MEAN "BUSTY" - and thanks for getting that word into my search history!

Lest you think all my gripes are with Google, traditional desktop software such as Word is just as bad. I have complained before about its "intelligent" selection, which automatically expands the selection to the nearest whole word, regardless of whether that was what the user wanted or not.

The logical end state of all this is that we pay extra to have all wizards and assistive features turned off - much as it now costs more to get T-shirts that don’t have stupid logos or slogans all over them.

If you follow tech news at all - and if not, why are you here, Mum? - you know that Microsoft finally got around to releasing Office for iPad.

Within hours of the launch, Word became the most downloaded application for iPads in Apple's app store.

The Excel and Powerpoint apps were the third and fourth most popular free app downloads, respectively, in the store.

Note that the apps themselves are free, but advanced functionalities - such as, for instance, editing a document - require an Office 365 subscription. A Home Premium subscription to Office 365 is $99 / £80 per year, which is a lot for home users. Fair enough, many Office users will presumably get the subscription through their employer, but many companies still don’t have subscriptions, so that is hardly a universal solution.1

In contrast, new iPads get the iWork apps for free, and even for older ones the price was hardly prohibitive - I think it was less than $10 per app when I bought them. Lest you think that the iWork apps are limited, I have successfully used Pages to exchange documents with Word, with change tracking too. Numbers also works well with Excel files, including some pretty detailed models. Keynote falls down a bit, mainly because the iPad is lacking some fonts, but a small amount of fiddling can usually sort that out too. I would assume that the fonts issue will bite PowerPoint on the iPad too, anyway.

The main thing though is that Office on the iPad is just too little, too late. Microsoft should have released this at least two years ago. By then it was clear that the iPad was the tablet in business. Far from the lack of Office killing the iPad, the lack of iPad support seriously undermined Office!

Anyway, I will probably never even download it, despite being an Office power user2 on my Mac. I think it will do okay, simply because of the critical mass of Office users that still exists, but Microsoft missed their chance to own the iOS productivity market the way they own that market on PCs.

A more detailed treatment of the pricing issue:

Apple makes their money on hardware sales. Therefore, they can give away iWork for iOS by baking its development costs into the overall iOS development costs.

Google makes their money on targeted advertising. Therefore, they can give away Google Drive because they’re scraping documents and tailoring ad content as a result. That’s pretty creepy, and might be against your employer’s best practices for confidentiality of information.

Microsoft doesn’t make money on iPad hardware sales, nor do they scrape Office documents for ads. Therefore, they charge you money to use their software beyond the basics. Makes sense to me.

Makes sense to me too.

Of course Microsoft may still make more money on Office this way by avoiding rampant piracy on the PC side. The question then becomes: what does this do to their market share? Part of the ubiquity of Microsoft was driven by wholesale piracy, especially among home users. ↩

Well, Word and PowerPoint, at least. Us marketing types don’t use much Excel, as a rule. ↩

Yesterday I wrote about how the value of private cloud is enabled by past inefficiencies: Hunting the Elusive Private Cloud. There is another side to that coin that's worth looking at. Vendors - especially, but not only, hardware vendors - made handsome profits catering to that inefficiency. If your datacenter utilisation rate is below 10%, then 90%+ of your hardware spending is… well, not quite wasted, but there are possible improvements there.

A decade or so ago, all the buzz was about virtualisation. Instead of running one physical server with low utilisation, we would put a number of virtual servers on the same bit of physical kit, and save money! Of course that’s not how it worked out, because the ease of creating new virtual servers meant that the things started multiplying like bunnies, and the poor sysadmins found themselves worse off, with even more servers to manage instead of fewer!

Now the promise of private cloud is that all the spare capacity can finally be put to good use. But what does this mean for the vendors who were relying on pushing all that extra tin?

Well, we don’t know yet. Most private cloud projects are, frankly, still at a very low level of maturity, so the impact on hardware sales is limited so far. One interesting indicator, though, is what happens as public cloud adoption ramps up.

Michael Coté, of 451 Research, flagged this (emphasis mine):

Buried in this piece on Cisco doing some public cloud stuff is this little description about how the shift to public cloud creates a strategic threat to incumbent vendors:

Cloud computing represented an interesting opportunity to equipment companies like Cisco, as it aggregated the market down to fewer buyers. There are approximately 1,500 to 2,000 infrastructure providers worldwide verses millions of businesses; reducing the buyers to a handful would lower the cost of sales. And, as cloud sales picked up, reducing on-premises equipment spending, those providers would represent an increasing share of sales and revenue.

The problem with this strategy, as companies like Cisco Systems and Juniper Networks discovered, is the exchange of on-premises buyers to cloud buyers is not one to one. Cloud providers can scale investments further than any individual enterprise or business buyer, resulting in a lower need for continually adding equipment. This phenomenon is seen in server sales, which saw unit shipments fall 6 percent last year and value fall nearly twice as fast.

Even if we assume that a company offloading some servers to the public cloud instead of buying or replacing them in its own datacenter is doing so on a 1:1 basis - one in-house physical server replaced by one virtual server in the public cloud - the economics mean that the replacement will be less profitable for the equipment vendor. The public cloud provider will be able to negotiate a much better price per server because of their extremely high purchasing volume - and this doesn’t even consider the mega-players in cloud, who build their own kit from scratch.

Since I mentioned Cisco, though, I should point out that they seem to be weathering the transition better than most. According to Forrester’s Richard Fichera, Cisco UCS at five years is doing just fine:

HP is still number one in blade server units and revenue, but Cisco appears to be now number two in blades, and closing in on number three world-wide in server sales as well. The numbers are impressive:

32,000 net new customers in five years, with 14,000 repeat customers

Claimed $2 Billion+ annual run-rate

Order growth rate claimed in "mid-30s" range, probably about three times the growth rate of any competing product line.

To me, it looks like the UCS server approach of very high memory density works very well for customers who aren’t at the level of rolling their own servers, but have outgrown traditional architectures. Let’s see what the next five years bring.

While I work for a cloud management vendor, the following represents my personal opinion - which is why it’s published here and not at my work blog.

It seems that in IT we spend a lot of time re-fighting the same battles. The current example is "private cloud is not a cloud".

Some might expect me to disagree, but in fact I think there’s more than a grain of truth in that assertion. The problem is in the definition of what is a cloud in the first place.

If I may quote the NIST definition yet again: (revs up motorcycle, lines up on shark tank)

On-demand self-service. A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider.

Broad network access. Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations).

Resource pooling. The provider’s computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or datacenter). Examples of resources include storage, processing, memory, and network bandwidth.

Rapid elasticity. Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be appropriated in any quantity at any time.

Measured service. Cloud systems automatically control and optimize resource use by leveraging a metering capability at some level of abstraction appropriate to the type of service (e.g., storage, processing, bandwidth, and active user accounts). Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service.

The item most people point to when making the claim that "private cloud is not a cloud" is the fourth in that list: elasticity. Public clouds have effectively infinite elasticity for any single tenant: even Amazon itself cannot saturate AWS. By definition, private cloud does not have infinite elasticity, being constrained to whatever the capacity of the existing datacenter is.

So it’s proved then? Private cloud is indeed not a cloud?

Not so fast. There are two very different types of cloud user. If you and your buddies founded a startup last week, and your entire IT estate is made up of bestickered MacBooks, there is very little point in looking at building a private cloud from scratch. At least while you are getting started and figuring out your usage patterns, public cloud is perfect.

However, what if you are, say, a big bank, with half a century’s worth of legacy IT sitting around? It’s all very well to say "shut down your data centre, move it all to the cloud", but these customers still have mainframes. They’re not shuttering their data centres any time soon, even if all the compliance questions can be answered.

The reason this type of organisation might want to look at private cloud is that there’s a good chance that a substantial proportion of that legacy infrastructure is under- or even entirely un-used. Some studies I’ve seen even show average utilisation below 10%! This is where they get their elasticity: between the measured service and the resource pooling, they get a much better handle on what that infrastructure is currently used for. Over time, private cloud users can then bring their average utilisation way up, while also increasing customer satisfaction.

Each organisation will have its own utilisation target, although 100% utilisation is unlikely for a number of reasons. In the same way, each organisation will have its own answer as to what to do next: whether to invest in additional data centre capacity for their private cloud, or to add public cloud resources to the mix in a hybrid model.
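A quick back-of-the-envelope calculation shows how much headroom that leaves. This is only a sketch: the 10% figure comes from the studies mentioned above, while the estate size and the targets are made-up numbers, and `servers_needed` is a hypothetical helper. It also assumes that load can be repacked freely across identical servers, which real estates rarely allow.

```python
def servers_needed(current_servers: int, current_pct: int, target_pct: int) -> int:
    """Servers required to carry the same total load at a higher average utilisation.

    Utilisation is given in whole percent to keep the arithmetic exact.
    """
    if not (0 < current_pct <= 100 and 0 < target_pct <= 100):
        raise ValueError("utilisation must be between 1 and 100 percent")
    # Total work, expressed in "percent of one server" units.
    load = current_servers * current_pct
    # Ceiling division: a fraction of a server still means one more box.
    return -(-load // target_pct)


# Illustrative numbers only: 1,000 servers averaging 10% utilisation.
print(servers_needed(1000, 10, 50))  # 200
print(servers_needed(1000, 10, 60))  # 167
```

In this made-up case, moving from 10% to 50% average utilisation means the same work fits on a fifth of the hardware. The remaining capacity is where a private cloud finds its elasticity.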

The point remains though that private cloud is unquestionably "real" and a viable option for these types of customers. Having holy wars about it among the clouderati is entertaining, but ultimately unhelpful.

I have commented before about how Android handset advertising resembles the worst sort of PC advertising.

Now it seems Samsung has gone all the way and infests their phones with crapware - I mean, "valuable software pre-installed for your convenience": Samsung Galaxy S5 Comes With Premium App Subscriptions.

Whoever at Samsung thought this was a good idea should be used as target practice for the company’s autonomous guard-bots (no, really, those are a thing). One of the factors driving people away from Windows is the fact that most "civilian" installations come loaded down with all sorts of horrors: unkillable system tray cruft, toolbars cluttering up every window, a brace of expired trials for vapourware security products, and various broken installs of different versions of antivirus tools. Corporate Windows images are not much better, with encryption watchdogs, twenty passwords required, and disk audits and mandatory reboots happening at the most inconvenient times.1

Faced with this sort of user experience, most people run away screaming - often to Apple. Now Samsung wants to bring this same dynamic to mobile?

Android purists will no doubt point out that it is possible to install clean Android here. In the real world, I suspect that about as many people will do this as already reinstall clean Windows to their consumer boxes - i.e. only us weirdos. ↩

No, it’s not what you think… Here "unicorns" are guru-level sysadmins, difficult to find in the wild when you really need them.1

The Register says:

The rise of the cloud is wiping out the next generation of valuable sysadmins as startups never learn about how to manage data-center gear properly

The thesis goes like this: in the old, pre-AWS days (can you believe that’s only eight years ago?) you and your friends would start a company and code up a demo on your be-stickered MacBooks at the local independent coffee shop. Once you had your demo, you would take it to VCs in order to get funding to buy some servers, host them in a data center somewhere, and start building your infrastructure. This would require professional sysadmins who would gain experience taking a tool to production scale, and so there was good availability of sysadmins who had that experience.

Nowadays, those be-stickered MacBooks might represent the entire IT estate of a startup. Everything else runs in the cloud somewhere - probably in AWS. Now this is great from some points of view: by definition, startups don’t know what their adoption rate is going to be, so having AWS’ effectively infinite pool of capacity to fall back on is very useful in case they get Slashdotted2 one day. Vice versa, they don’t get stuck paying the bills for an oversized data center if take-off turns out to require a bit more time than they had initially hoped.

So far, so good. The problem is when that startup’s business has more or less stabilised, and they would like to move the more predictable parts of their infrastructure from expensive AWS3 to more traditional infrastructure. They do not have the skills in-house to do this because they have never needed to develop them, so they need to hire sysadmins in a hurry.

This pattern has played out at numerous startups, including ephemeral messaging service Snapchat which started life on Google App Engine and then hired away senior Google cloud employee Peter Magnusson to help it figure out how to move bits of its workload off the cloud.

Similarly, backend-as-a-service company Firebase started out life on a major public cloud but had to shift to bare-metal servers after it ran into performance issues.

Now, if you’re in the position to hire senior Google employees in a hurry, then your startup is doing well and I congratulate you. However, what about all the other startups without the sort of public profile that Snapchat enjoys?

Draining the sysadmin talent pool is a real problem because there is no straight career path to get there. Sysadmins have to be talented generalists, able to work at different levels of abstraction, pivot rapidly in response to changing technological realities, and react quickly to tactical requests while working towards strategic objectives. You can’t get a degree in any of that, you need to learn by doing. I know, I’ve been there, done that, got the T-shirt4. I caused my mentors a certain number of headaches during the learning process, but I came out of it with valuable experience. Ultimately my poor timing meant that the bubble burst, sysadmin jobs were in short supply, and I had to take my career in different directions, although a certain generalist/tinkerer aspect has been a constant.

Also, sysadmin knowledge is pretty specific. Even if you are able to find a Linux guru who has done it all, partied with Linus and argued ESR to a standstill, it will still take some time to get them hired and up to speed in your environment. Congratulations: now your inability to hire and onboard sysadmins more rapidly is a bottleneck on your business growth. A slowdown in growth is NOT what you want in a startup. You want a nice steep growth curve, with no interruptions or even temporary flattenings.

I suspect many business models include an unspoken assumption of "and then we’ll open our own datacenter". Not many people appear to be thinking about the business risk involved in that step, especially the human factors.

Note: I am absolutely not advocating staying away from the public cloud! If a workload is bursty and unpredictable, as most startups are by definition, the public cloud is a very good fit. The same applies to traditional enterprises operating services with those characteristics - even if they have some cultural obstacles to adopting the cloud. On the other hand, a static repository of large binaries, say, or a service with extensive compliance requirements, may well be better off on more traditional infrastructure.

If the premise of the article is true, and traditional sysadmin skills are becoming rarer, it may be tougher than expected to transition to a traditional on-premise or managed-hosting model. Business plans and product roadmaps will need to allow for both the technical aspects and the business risk aspects.

If you are lucky enough to have generalists around, don’t try to pigeonhole them. Look after your sysadmins, and they’ll look after you.

In one of those displays of serendipity, I just finished reading this article over my lunch break. It seems the problem is not limited to IT, but is generalised throughout the economy.

A generalised problem would seem to be harder to solve, but in IT we pride ourselves on being able to break these sorts of deadlocks. What is the solution that combines short-term benefits with long-term ones to the detriment of neither?

This is a mature, professional and non-discriminating blog, so nobody will make any jokes about hunting both sysadmins and unicorns using young nubile women as bait. ↩

I know, showing my age there! ↩

I have a vague memory of a study showing AWS (as a proxy for public cloud in general) could be as much as 25% more expensive over time for certain types of steady-state workloads once you factor in bandwidth. However, I can’t lay my hands on it right now. If I find it, I will update this post. ↩

Black, of course, and emblazoned with the following slogan: select * from USERS where CLUE > 0; 0 rows returned ↩

Latest pointless tech spotted in the wild: wireless charging points. Of course everybody is ignoring them in favour of good old sockets.

It doesn't help that the wireless charging appears to require some sort of weird dongle that you have to go somewhere else to get, surrendering photo ID for the privilege.

What, they couldn't use NFC? /snark

Today at the Geneva International Motor Show, Apple announced CarPlay, which is the new name for "iOS in the car". It looks great!

They are pitching it as "a Smarter, Safer & More Fun Way to Use iPhone in the Car", and I love the concept. We upgrade our phones every year or two, but our cars much less frequently than that. In-car entertainment systems are limited by the automotive industry’s product cycles, so they are basically already obsolete (in consumer terms) by the time the car hits the showroom. Enabling cars to piggy-back on the smart, GPS-navigating, voice-recognising, music-playing computers that we already carry in our pockets can only be a good thing.

Take a look at a video of CarPlay in action, though, and one huge issue stands out.

Touchscreens in cars are a terrible idea. Old-school controls gave drivers haptic feedback: if you turn the dial one notch, you feel the click, and you get the next radio station, or one increment of temperature, or whatever it is. This is something drivers can do completely blind, without taking their eyes off the road.

It’s been at least a decade since cars could accommodate physical controls for all of their functions, so multi-function control systems like iDrive, Comand or MMI were introduced to help with the plethora of systems and settings that modern cars require. All of these systems allow users to navigate hierarchical menu trees to control in-car entertainment, navigation, and vehicle settings.

These systems are still better than touchscreens, though, because at least the driver’s hand rests on the one control, and that control still has tactile increments to it. The haptic feedback from those clicks is enough for seasoned users to be able to navigate the menu tree with only occasional glances at the screen - very important at highway speeds.

Touchscreens are terrible for non-visual feedback. Users have no idea, short of looking at the screen, whether their action achieved the result they wanted or not.

Apple’s suggestion of using Siri is not much of a fix. I like Siri and use the feature a lot in my car to dictate e-mails or messages, but it depends entirely on network access. Out of major cities - you know, where I take my car - network access is often insufficient to use Siri with any reliability, so drivers will almost certainly need to use the touchscreen as well.

I really hope that CarPlay also works with steering-wheel mounted controls, as those allow control with an absolute minimum of interruption. If we could have audio feedback that did not require network access, using the existing Accessibility text-to-speech functionality in iOS, that would be perfect.