A machine learning algorithm walked into a bar.

The bartender asked, "What would you like to drink?"

The algorithm replied, "What’s everyone else having?"

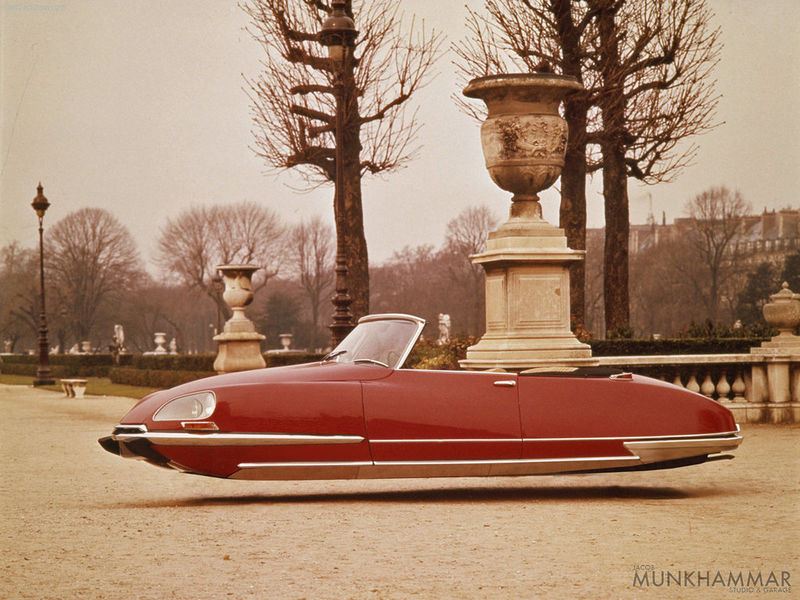

Citroën DS 19, at Museo dell’Automobile in Turin.

The Déesse has long been one of my dream cars, but I can’t do it justice in words. Fortunately, Roland Barthes did it for me:

The new Citroën manifestly falls from the sky, inasmuch as it presents itself first of all as a superlative object. […] The "Déesse" has all the characteristics (or at least the public begins by unanimously attributing them to it) of one of those objects descended from another universe, which fed the neomania of the eighteenth century and that of our own science fiction: the Déesse is first and foremost a new Nautilus.

I don’t know whether Renaud Marion had this maquette in mind when he came up with his Air Drive series, but it seems probable. Unfortunately, "Air Drive" did not include a DS, but Jacob Munkhammar has stepped up to fill that gap.

There’s a dealership I’d love to visit! I wonder whether that’s the one where they got the flying DS taxi for Back to the Future Part II?

Well, the EU has come out and said what we all knew already: Uber is a taxi firm, not a tech firm.

This is of a piece with the decision by Transport for London to revoke Uber’s license to operate, which is currently under appeal. There are a few important things to say about the EU result in particular.

First of all, there is a distinction to be made between Uber the service and Uber the company. The service is incredibly convenient, and has in many ways completely superseded traditional taxi services, especially when travelling. Instead of a myriad of taxi firm numbers for each city that I visit regularly, and the requirement upon arrival to make sure I have enough local currency to get wherever I need to go, I can just whip out my phone as soon as I’ve cleared customs, and usually arrive at the meeting spot around the same time as the car.

When I reach the destination, I simply get out of the car and walk away, and my receipt is emailed to me automatically. This is pretty much the Platonic ideal of a taxi service.

Uber the company - well, that is a different story. From untold counts of sexual harassment (which are now to be made into a movie), to active evasion of regulators in the Greyball programme, to the actual matter of this case - claiming that their drivers are "independent contractors", rather than employees - this is not a company that anyone should support.

Sure, capitalism is always pretty red in tooth and claw, and the various regulators that Uber has been doing battle with have hardly covered themselves in glory over the years. The taxi drivers trying to present themselves as innocents who have been hard done by could have implemented something similar to Uber years ago, but preferred to continue operating vehicles that were hazards to safety and hygiene, claim that the credit card machine was perennially out of order, refuse to provide tax receipts, and offer to fill in vastly inflated amounts on an expense slip as compensation.

There is also an argument that Uber increased provision of transportation services in previously under-served areas, and even provides a cheaper way to get to hospital than an ambulance.[1]

All of this is true, but we cannot overlook how these results were achieved.

Remember, too, that what we have seen until now is Nice Uber. This is Uber still operating in a mode where they are willing to burn their investors’ capital to capture market share, funding up to 40% of the cost of a ride. Assuming that they are successful in capturing a monopoly, that subsidy would presumably end shortly afterwards, and in order to keep fees to riders down, the payment to drivers would surely take a hit. Nasty Uber looking to monetise its monopoly and cut its stupendous losses ($2.8B last year alone) would surely start cutting corners elsewhere, too.

This ruling brings to light once more a common tech fallacy. The tech world runs on codes. Success can be had by operating precisely within the codes, or by finding ingenious loopholes that let you do something unexpected. Confusion sometimes occurs because the law is also a code. Some techies misinterpret the code of law as being similar to the codes and specifications that they are used to, and think that they have identified a cunning loophole that nobody else has thought about and which allows them to route around some provision of law that they do not like. For instance, they might decide to start a taxi firm, except - aha! - the drivers are not employees, but "independent contractors" who operate their own vehicles (which are in turn leased from the totally-not-their-employer taxi firm). And the best part is, it all follows the letter of the law!

The thing is, computer code is not at all the same as a code of law. It is true that regulation moves slowly and can become outdated, and there is significant abuse because of that. In Italy, for instance, the German low-cost bus booking app Flixbus is in trouble with the law (link in Italian) because incumbents have managed to get laws proposed that would ban bus companies from operating unless they own their vehicles. That requirement is obviously a problem for Flixbus, a booking platform that aggregates across multiple bus operating companies, but does not actually run any buses itself.

This is precisely the sort of situation that the "app economy" is supposed to disrupt: instead of a Balkanised patchwork of bus companies, have a single multinational app platform through which passengers can book trips in more comfort - although perhaps at the expense of a comfortable status quo for incumbent local bus operators.

This is how Uber would like to present itself too - as plucky David taking on established Goliath, or as an underdog going up against The Man, who is also hand in hand with City Hall. However, there is a fine line between opposing laws and regulations, and working actively to evade them. In the latter situation, once the law does catch up, it will shut down whatever loopholes were being used, and then the consequences are far more grave than if a technical loophole were closed through a software update. Not only does the cunning hack to the law code no longer work, but the would-be law hacker may now find themselves subject to penalties far more draconian than for violating the GPL.

Lately American firms have been unpleasantly surprised to find that the EU’s bark does in fact have a bite behind it. They had generally ignored the EU as being toothless and slow-moving, but activists and politicians have goaded the behemoth into motion, and the $2.4 billion fine against Google was only the start. Margrethe Vestager, the current European Commissioner for Competition, is outspoken and determined to enforce a particularly European vision of competition. Given that Neelie Kroes is now on Uber’s Public Policy Advisory Board, perhaps this is a sign that Uber’s leadership recognise this fact, and will move with more tact from now on.

Between France 🇫🇷 and Germany 🇩🇪, Europe's getting a bit hot for Facebook!

— Dominic 🇪🇺🇺🇦🏳️🌈 (@dwellington) December 19, 2017

France orders WhatsApp to stop sharing user data with Facebook without consent https://t.co/EuC3m9IOEa

German antitrust watchdog warns Facebook over data collection https://t.co/vdUYszciMm #privacy pic.twitter.com/HnNvewGrIY

It is true that getting a taxi used to be a hassle and now it’s mostly not, and that fact is largely thanks to Uber - but we cannot simply accept Uber's culture of "ask for forgiveness rather than permission - in fact, forget forgiveness, we’ve done it and that’s that". I hope the service can continue while the company is reformed under new leadership, but that will have to be done without the fig leaf that Uber is a technology firm or part of the sharing economy or whatever. It’s a taxi firm, it owns vehicles and employs drivers, and it had better apply its substantial and undoubted ingenuity to working out what that means.

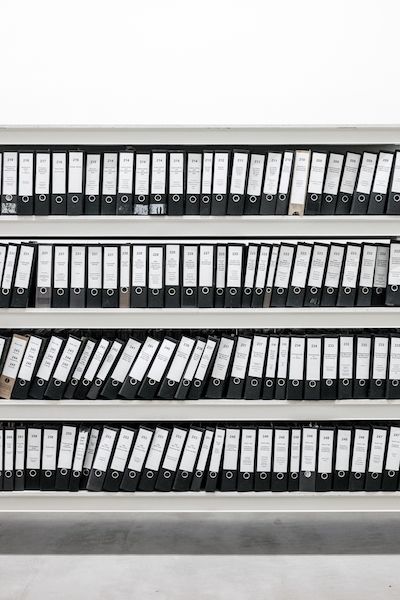

Photos by Peter Kasprzyk and Samuel Zeller on Unsplash.

[1] As a European, I find this story has any amount of WTF to it. Sorry, America, but your healthcare industry is utterly bananas, and you need to fix it. Single-payer systems have their flaws too, but they absolutely beat the alternative.

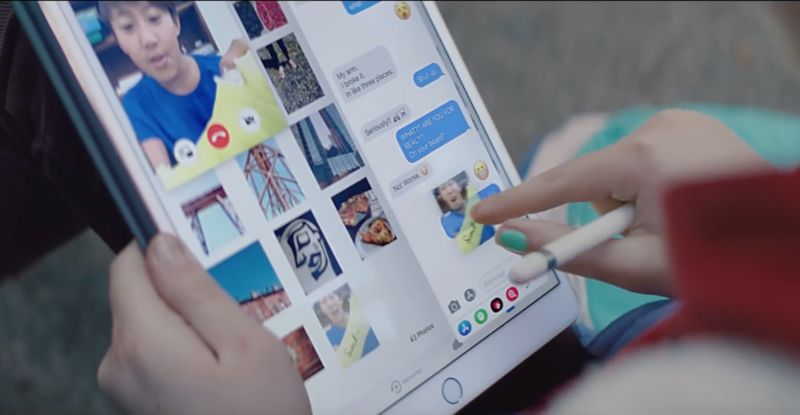

So there’s an Apple ad for the iPad Pro out there, which is titled "What’s a computer?". It’s embedded here, in case you’re like me and don’t see ads on TV.

tl;dr is that the video follows a young girl around as she does various things using her iPad Pro, signing a friend’s cast over FaceTime and sending a picture of it via Messages and so on.

It’s all very cute and it highlights the capabilities of the iPad Pro (and of iOS 11) very well.

However, there is a hidden subtext here: that it is only young people, growing up knowing nothing but phones and tablets, who will come to think of them as their only devices in this way. Certainly it’s true of my kids; I no longer have any desktop computers in the house, so they have never seen one. There is a Mac mini media server, but it runs headless in a cupboard, so it hardly looks like a "computer". My wife and I have MacBooks, but they’re our work machines. My personal device is my iPad Pro.

My son actually just started computing classes in school this year, and was somewhat bemused to be faced with an external keyboard and mouse. At least they’ve moved on from CRTs since my day…

There is another group of users who have adopted the iPad enthusiastically, and that is older people. My mother used to invite me for lunch, and then casually mention that she "had some emails" for me to do. She would sit across the room from the computer and dictate to me, because she never felt comfortable doing anything on the infernal machine herself.

Since she got her first iPad a few years ago, she has not looked back. She is now a regular emailer – using the on-screen keyboard, no less, as I have not been able to persuade her to spring for a Pro yet. She surfs the web, comments on pictures of her grandchildren, keeps up with distant friends via Skype and Facebook, and even plays Sudoku.

That last point is particularly significant, as for people who grew up long before computers in homes, it is a major shift to embrace the frivolous nature of some (most?) of what we do on these devices.

None of this is to say that I disagree with Apple’s thesis in the ad. When it comes to computers, my own children only really know iPads first-hand. They see adults using laptops occasionally, and of course spending too much time on their phones, but they don’t get to use either of those devices themselves. As far as they are concerned, "computer" might as well mean "iPad".

I just think that they should do a Volume Two of that ad, featuring older people, and perhaps emphasising slightly different features - zoomed text, for instance, VoiceOver, or the many other assistive technologies built into iOS. Many older people are enthusiastic iPad users, but are not naturally inclined to upgrade, and so may still be using an iPad 2 or an original iPad mini. A campaign to showcase the benefits of the Pro could well get more of these users to upgrade - and that’s a win for everyone.

AI and machine learning (ML) are the hot topics of the day. As is usually the case when something is on the way up to the Peak of Inflated Expectations, wild proclamations abound of how this technology is going to either doom or save us all. Going on past experience, the results will probably be more mundane – it will be useful in some situations, less so in others, and may be harmful where actively misused or negligently implemented. However, it can be hard to see that stable future from inside the whirlwind.

In that vein, I was reading an interesting article which gets a lot right, but falls down by conflating two issues which, while related, should remain distinct.

there’s a core problem with this technology, whether it’s being used in social media or for the Mars rover: The programmers that built it don’t know why AI makes one decision over another.

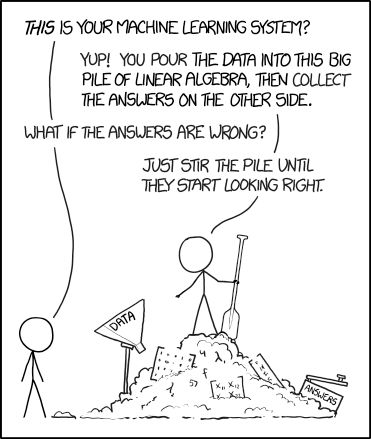

The black-box nature of AI comes with the territory. The whole point is that, instead of having to write extensive sets of deterministic rules (IF this THEN that ELSE whatever) to cover every possible contingency, you feed data to the system and get results back. Instead of building rules, you train the system by telling it which results are good and which are not, until it starts being able to identify good results on its own.

This is great, as developing those rules is time-consuming and not exactly riveting, and maintaining them over time is even worse. There is a downside, though, in that rules are easy to debug. If you want to know why something happened, you can step through execution one instruction at a time, set breakpoints so that you can dig into what is going on at a precise moment in time, and generally have a good mechanical understanding of how the system works - or how it is failing.
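To make the contrast concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the two event features (`cpu_load`, `error_rate`), the thresholds, the training examples, and the nearest-centroid technique itself, which is just about the simplest thing that can be called "learned" and is not a description of any particular product. The first function is a set of hand-written rules you can step through in a debugger; the second pair of functions derives its behaviour from labelled examples instead.

```python
# Hypothetical monitoring events: (cpu_load, error_rate), both in the range 0..1.

def rule_based(cpu_load, error_rate):
    """Hand-written rules: every branch can be read and stepped through."""
    if error_rate > 0.5:
        return "alert"
    elif cpu_load > 0.9:
        return "alert"
    else:
        return "ok"


def train_centroids(examples):
    """Learn one average point (centroid) per label from labelled examples."""
    sums, counts = {}, {}
    for (cpu_load, error_rate), label in examples:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + cpu_load, sy + error_rate)
        counts[label] = counts.get(label, 0) + 1
    return {
        label: (sx / counts[label], sy / counts[label])
        for label, (sx, sy) in sums.items()
    }


def learned(centroids, cpu_load, error_rate):
    """Pick the label whose centroid is closest to the new event."""
    def dist2(c):
        return (c[0] - cpu_load) ** 2 + (c[1] - error_rate) ** 2
    return min(centroids, key=lambda label: dist2(centroids[label]))


# Made-up training data: a human has flagged which events were good or bad.
training = [
    ((0.2, 0.1), "ok"),
    ((0.3, 0.0), "ok"),
    ((0.4, 0.2), "ok"),
    ((0.95, 0.3), "alert"),
    ((0.7, 0.8), "alert"),
    ((0.9, 0.9), "alert"),
]
centroids = train_centroids(training)

print(rule_based(0.8, 0.7), learned(centroids, 0.8, 0.7))
print(rule_based(0.25, 0.05), learned(centroids, 0.25, 0.05))
```

With the rules, the answer to "why did this fire?" is a specific line of code. With the learned version, the only answer is "because of the examples it was given", and the only lever is to change those examples. Real ML systems are vastly more complex than two centroids, which makes that second answer even less satisfying.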

I spend a fair amount of my time at work dealing with prospective customers of our own machine-learning solution. There are two common objections I hear, which fall at opposite ends of the same spectrum, but both illustrate just how different users find these new techniques.

Yes, there is an XKCD for every occasion

The first group of doubters ask to "see the machine learning". Whatever results are presented are dismissed as "just statistics". This is a common problem in AI research, where there is a general public perception of a lack of progress over the last fifty years. It is certainly true that some of the overly-optimistic predictions by the likes of Marvin Minsky have not worked out in practice, but there have been a number of successes over the years. The problem is that each time, the definition of AI has been updated to exclude the recent achievement.

Something of the calibre of Siri or Alexa would once absolutely have been considered AI, but now their failure to understand exactly what is meant in every situation is considered to mean that they are not AI. Certainly Siri is not conscious in any way, just a smart collection of responses, but neither is it entirely deterministic in the way that something like Eliza is.[1]

This leads us to the second class of objection: "how can I debug it?" People want to be able to pause execution and inspect the state of variables, or to have some sort of log that explains exactly the decision tree that led to a certain outcome. Unfortunately machine learning simply does not work that way. Its results are what they are, and the only way to influence them is to flag which are good and which are bad.

This is where the confusion I mentioned above comes in. When these techniques are applied in a purely technical domain - in my case, enterprise IT infrastructure - the results are fairly value-neutral. If a monitoring event gets mis-classified, the nature of Big Data (yay! even more buzzwords!) means that the overall issue it is a symptom of will probably still be caught, because enough other related events will be classified correctly. If however the object of mis-categorisation happens to be a human being, then even one failure could affect that person’s job prospects, romantic success, or even their criminal record.
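A rough back-of-the-envelope sketch of why volume helps in the infrastructure case and not in the human one. The assumptions here are mine and deliberately crude: each event is mis-classified independently with the same probability, and an issue is "missed" only if more than half of its related events are mis-classified. The function name and the 10% error rate are made up for illustration.

```python
from math import comb


def prob_issue_missed(n_events, error_rate):
    """Chance that more than half of n_events are mis-classified.

    Assumes each event is mis-classified independently with the same
    probability, which real monitoring data will not strictly satisfy.
    """
    k_min = n_events // 2 + 1  # smallest count that is a strict majority
    return sum(
        comb(n_events, k) * error_rate**k * (1 - error_rate) ** (n_events - k)
        for k in range(k_min, n_events + 1)
    )


# Same per-event error rate, different numbers of related events.
for n in (1, 5, 21):
    print(n, prob_issue_missed(n, 0.1))
```

Under those assumptions the chance of missing the issue shrinks rapidly as the number of related events grows. A decision about a person is the `n = 1` row: there is no crowd of other events to outvote the mistake.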

The black-box nature of AI & ML is why very great care must be taken to ensure that ML is a safe and useful technique to use in each case, in legal matters especially. The code of law is about as deterministic as it is possible to be; edge cases tend to get worked out in litigation, but the code itself generally aims for clarity. It is also mostly easy to debug: the points of law behind a judicial decision are documented and available for review.

None of these constraints apply to ML. If a faulty facial-recognition algorithm places you at the heart of a riot, it’s going to be tough to explain to your spouse or boss why you are being hauled off in handcuffs. Even if your name is ultimately cleared, there may still be long-term damage done, to your reputation or perhaps to your front door.

It’s important to note that, despite the potential for draconian consequences, the law is actually in some ways a best case. If an algorithm kicks you off Google and all its ancillary services (or Facebook or LinkedIn or whatever your business relies on), good luck getting that decision reviewed, certainly in any sort of timely manner.

The main fear that we should have when it comes to AI is not "what if it works and tries to enslave us all", but "what if it doesn’t work but gets used anyway".

Photo by Ricardo Gomez Angel via Unsplash

[1] Yes, it is noticeable that all of these personifications of AI just happen to be female.

This is a surprisingly practical setup. In this shot (taken with my iPad, because using the phone would have required a mirror) I am on a conference call while also reviewing some slides in PowerPoint. The AirPods mean I don’t get tangled up in wires, and the little stand means I have my hands free to drink coffee or whatever.

It’s not quite as good as doing it all on the iPad’s big screen, but the phone does get connectivity absolutely everywhere, and it’s much less of A Thing to set up in a café than an iPad, let alone a MacBook. I got a wifi-only iPad, because I would use its cellular modem about once a month if that, and it’s simply not worth it. And for reasons best known to themselves, my phone provider doesn’t offer tethering as an option on any contract I would be remotely interested in.