Yesterday was the LinkedIn equivalent of a birthday on Facebook: a new job announcement. I am lucky enough to have well-wishers[1] pop up from all over with congratulations, and I am grateful to all of them.

With a new job comes a new title – fortunately one that does not feature on this list of the most ridiculous job titles in tech (although I have to admit to a sneaking admiration for the sheer chutzpah of the Galactic Viceroy of Research Excellence, which is a real title that I am not at all making up).

The new gig is as Director, Field Initiatives and Readiness, EMEA at MongoDB.

Why there? Simply put, because when Dev Ittycheria comes calling, you take that call. Dev was CEO at BladeLogic when I was there, and even though I was a lowly Application Engineer, that was a tight-knit team and Dev paid attention to his people. If I have learned one thing in my years in tech, it’s that the people you work with matter more than just about anything. Dev’s uncanny knack for "catching lightning in a bottle", as he puts it, over and over again, is due in no small part to the teams he puts together around him – and I am proud to have the opportunity to join up once again.

Beyond that, MongoDB itself needs no introduction or explanation as a pick. What might need a bit more unpacking is my move from Ops, where I have spent most of my career until now, into data structures and platforms. Basically, it boils down to a need to get closer to the people actually doing and creating, and to the tools they use to do that work. Ops these days is getting more and more abstract, to the point that some people even talk about NoOps (FWIW I think that vastly oversimplifies the situation). In fact, DevOps is finally coming to fruition, not because developers got the root password, but because Ops teams started thinking like developers and treating infrastructure as code.

Between this cultural shift and the various technological shifts (to serverless, immutable infrastructure, and infrastructure as code) that precede, follow, and go along with it, it’s less and less interesting to talk about separate Ops tooling and culture. These days, the action is in the operability of development practices, building in ways that support business agility, rather than trying to patch the dam by addressing individual sources of friction as they show up.

More specifically to me, my particular skill set works best in large organisations, where I can go between different groups and carry ideas and insights with me as I go. I’m a facilitator; when I’m doing my job right, I break information out of silos and spread it around, making sure nobody gets stuck on an island or perseveres with some activity or mode of thinking that is no longer providing value to others. Coming full circle, this fluidity in my role is why I tend to have fuzzy, non-specific job titles that make my wife’s eyes roll right back in her head – mirroring the flow I want to enable for everyone around me, whether colleagues, partners, or users.

It’s all about taking frustration and wasted effort out of the working day, which is a goal that I hope we can all get behind.

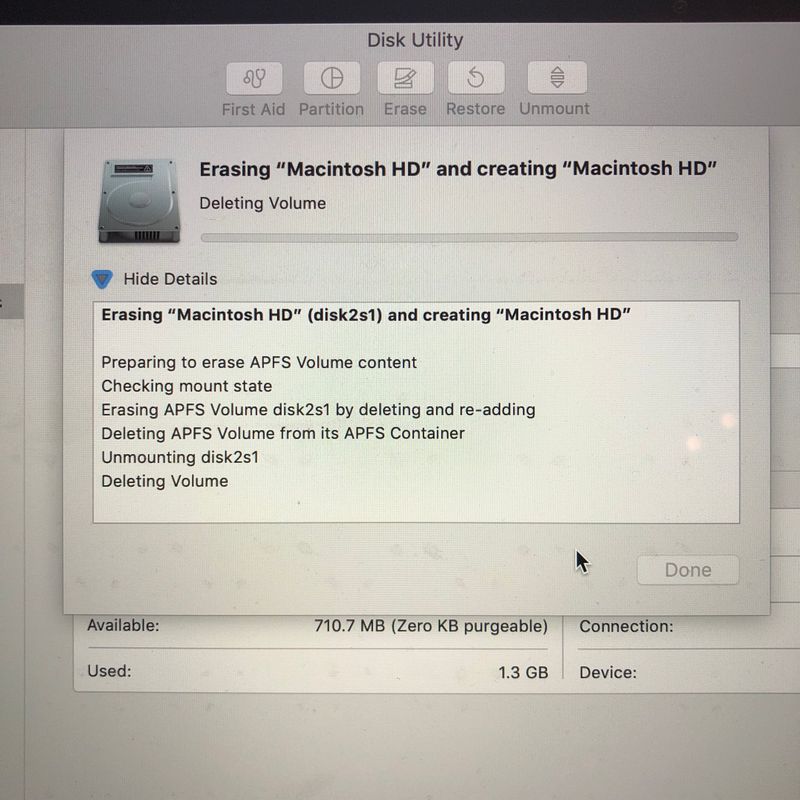

Now, time to blow away my old life…

---

[1] Incidentally, this has been the first time I’ve seen people use the new LinkedIn reactions. It will be interesting to watch the uptake of this feature.