During the ongoing process of getting back on the road and getting used to meeting people in three dimensions again, I noticed a few presenters struggling with displaying slides on a projector. These skills may have atrophied with remote work, so I thought it was time for a 2023 update to a five-year-old blog post of mine where I shared some tips and tricks for running a seamless presentation.

Two Good Apps

One tip that remains unchanged from 2018 is a super-useful (free) Mac app called Display Menu. Its original purpose was to make it easy to change display resolutions, which is no longer as necessary as it once was, but the app still has a role in giving a one-click way to switch the second display from extended to mirrored. In other words, the projector shows the same thing as your laptop display. You can also do this in Settings > Displays, of course, but Display Menu lives in the menu bar and is much more convenient.

Something else that can happen during presentations is the Mac going to sleep. Caffeine, my original recommendation, is no longer with us, but it has been replaced by Amphetamine. As with Display Menu, this is an app that lives in the menu bar, and lets you delay sleep or prevent it entirely. It’s worth noting that entering presenter mode in PowerPoint or Keynote will prevent sleep automatically, but many people like to show their slides in slide sorter view rather than actually presenting.
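If you would rather not install another menu bar app, macOS also ships a command-line tool called caffeinate that holds off sleep for a set time. Here is a minimal sketch of wrapping it in Python, assuming Python 3 on a Mac. The function names build_caffeinate_command and keep_awake are made up for illustration, and the choice of flags is just one reasonable combination, not the only one.

```python
import shutil
import subprocess


def build_caffeinate_command(minutes):
    """Return the argument list that keeps the Mac awake for `minutes` minutes.

    Hypothetical helper for illustration; not part of any library.
    """
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    seconds = int(minutes * 60)
    # -d: prevent the display from sleeping
    # -i: prevent the system from idle sleeping
    # -t: release the hold after this many seconds
    return ["caffeinate", "-d", "-i", "-t", str(seconds)]


def keep_awake(minutes):
    """Hold off sleep until the timeout expires or the process is interrupted."""
    if shutil.which("caffeinate") is None:
        # caffeinate only exists on macOS, so fail loudly elsewhere
        raise RuntimeError("caffeinate not found; this sketch only works on macOS")
    subprocess.run(build_caffeinate_command(minutes), check=True)
```

Calling keep_awake(60) just before you walk on stage should cover an hour-long slot. The call blocks until the timer runs out, so run it in its own terminal window and press Ctrl-C if you finish early.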

Two Good Techniques

If you are using the slide sorter view in order to be able to control your presentation better and jump back and forth, you really need to learn to use Presenter Mode instead. This mode lets you use one screen, typically your laptop's own, as your very own speaker's courtesy monitor, with a thumbnail view of the current and next slides, as well as your presenter notes and a timer. Meanwhile all the audience sees is the current slide, in full screen on the external display. You can also use this mode to jump around in your deck if needed to answer audience questions — but do this sparingly, as it breaks the thread of the presentation.

My original recommendation to set Do Not Disturb while presenting has been superseded by the Focus modes introduced with macOS Monterey. You can still just set Do Not Disturb, but Focus has the added intelligence of preventing notifications only until the end of the current calendar event. However, you can also create more specific Focus modes to fit your own requirements.

A Nest Of Cables

The cable situation is much better than it was in 2018. VGA is finally dead, thanks be, and although both HDMI and USB-C are still out there, many laptops have both ports, and even if not, one adapter will cover you. Also, that single adapter is much smaller than a VGA brick! I haven't seen a Barco ClickShare setup in a long time; I think everyone realised they were cool, but more trouble than they were worth. Apple TVs are becoming pretty ubiquitous — but do bear in mind that sharing your screen to them via AirPlay will require getting on some sort of guest wifi, which may be a bit of a fiddle. Zoom and Teams room setups have displaced WebEx almost everywhere, and give the best of both worlds: if you can get online, you can join the room's meeting, and take advantage of screen, camera, and speakers.

Remote Tips

All of those recommendations apply to in-person meetings when you are in the room with your audience. I offered some suggestions in that older piece about remote presentations, but five years ago that was still a pretty niche pursuit. Since 2020, on the other hand, all of us have had to get much better at presenting remotely.

Many of the tips above also apply to remote presentations. Presumably you won't need to struggle with cables in your own (home) office, but on the other hand you will need to get set up with several different conferencing apps. Zoom and Teams are duking it out for ownership of this market, with Google Meet or whatever it's called this week a distant third. WebEx and Amazon Chime are nowhere unless you are dealing with Cisco or Amazon respectively, or maybe one of their strategic customers or suppliers. The last few years have seen an amazing fall from grace for WebEx in particular.

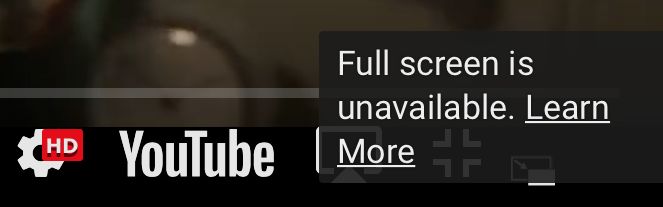

At the very least, get Zoom and Teams set up ahead of time, and if possible do a test meeting to make sure they are using the right audio and video devices and so on. Teams in particular is finicky with external webcams, so be ready to use your built-in webcam instead. If you haven't used one of these tools before and you are on macOS Catalina or later, remember that you will need to grant it access to the screen before you can share anything. When you do that, you will need to restart the app, dropping out of whatever meeting you are in. This is obviously disruptive, so get this setup taken care of beforehand if at all possible.

Can You See Me Now?

On the topic of remote meetings, get an external webcam, and set it up above a big external monitor, as big as you can accommodate in your workspace and budget. The webcam in your laptop is rubbish, and you can't angle it independently from the display, so one or the other will always be wrong, or quite possibly both.

Your Mac can also now use your iPhone as a webcam. This feature, called Continuity Camera, may or may not be useful to you, depending on whether you have somewhere to put your phone so that it has a good view of you — but it is a far better camera than what is in your MacBook's lid, so it's worth at least thinking about.

I Can See You

Any recent MacBook screen is very much not rubbish, on the other hand, but it is small, and once again, hard to position right. An external display is going to be much more ergonomic, and should be paired with an external keyboard and mouse. We all spend a lot of time in front of our computers, so it's worth investing in our setups.

Apart from the benefits of better ergonomics when working alone, two separate displays also help with running remote presentations, because you can set one to be your presenter screen and share the other with your audience. You can also put your audience's faces on the screen below the webcam, so that you can look "at" them while talking. Setting things up this way also prevents you from reading your slides — but you weren't doing that anyway, right? Right?

I hope some of these tips are helpful. I will try to remember to share another update in another five years, and see where we are then (hint: not the Metaverse). None of the links above was sponsored, by the way — but if anyone has a tool that they would like me to check out, I'm available!

🖼️ Photos by Charles Deluvio and ConvertKit on Unsplash; Continuity Camera image from Apple.